Tools Developed

RSIM x86

RSIM x86 is a port of the widely used RSIM performance simulator for cc-NUMA multiprocessors to GNU/Linux and x86 hardware.

Introduction

Research and system design in computer architecture involve deciding among many interrelated tradeoffs, and the field is increasingly driven by quantitative data. Developers can usually devise analytical models to bound the design space in the very early development stages, but the interactions among the many design decisions in today's increasingly complex systems make it impossible to use these analytical models to accurately predict the performance of a finished system. Hence, we need experimental models to estimate the performance impact of a possible design decision before building a finished system.

Performance simulators are complex software systems which accurately model the behavior of a hardware system.

RSIM is a simulator primarily targeted at studying shared-memory cache-coherent (cc-NUMA) multiprocessor architectures built from processors that aggressively exploit instruction-level parallelism (ILP).

RSIM's key advantage is that it models a system composed of several out-of-order processors that aggressively exploit ILP. The model includes an aggressive memory system and a scalable interconnection network. Using detailed ILP models for the simulated processors provides a realistic approximation to modern and future multiprocessor systems. RSIM is flexible enough to simulate a range of systems, from uniprocessors to different cc-NUMA configurations.

Advantages of our port

We have ported RSIM to GNU/Linux running on x86 hardware to obtain increased performance for our simulations at a fraction of the original cost.

The purpose of our port of RSIM is to allow us to use our research resources more efficiently. Prior to the port, the small number of available machines to develop and run our simulations created long waiting queues and serious organizational problems.

Using an RSIM version that runs on cheap and readily available x86 hardware allows us to provide each researcher with their own workstation on which to comfortably develop and test experiments, and to use an inexpensive cluster of Linux/x86 machines to execute the longest simulations. The x86 version not only executes each benchmark faster but, more importantly, makes it easier to provide more resources to increase the throughput of the whole team.

Problems porting RSIM

RSIM is an interpreter for Solaris/SPARC v9 application executables. Internally, RSIM is a discrete event-driven simulator based on the YACSIM (Yet Another C Simulator) library from the Rice Parallel Processing Testbed (RPPT).

RSIM is written in a modular fashion using C++ and C for extensibility and portability. Initially, it was developed using Sun systems (Solaris 2.5) on SPARC. It has been successfully ported to HP-UX 10 running on a Convex Exemplar and to IRIX running on MIPS. However, porting it to 64-bit or little-endian architectures requires significant additional effort.

We have successfully ported RSIM to GNU/Linux running on x86 architectures. The main problems that we have had to solve were:

- Build issues due to differences in libraries and headers between Solaris and Linux.

- Byte Ordering Issues.

- System call interface differences.

- Floating point incompatibilities.

For a more detailed explanation of these issues and how they were solved, please refer to the paper describing RSIM-x86.
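As an illustration of the byte-ordering issue only (the actual fixes are described in the paper, not here): SPARC v9 is big-endian and x86 is little-endian, so a simulator hosted on x86 has to decode the multi-byte values of the simulated program explicitly. The following minimal Python sketch is not RSIM code; the helper names `read_be_u32` and `read_le_u32` are hypothetical.

```python
import struct


def read_be_u32(memory: bytes, addr: int) -> int:
    """Decode a 32-bit unsigned big-endian word from simulated memory."""
    # ">I" forces big-endian decoding regardless of the host byte order.
    return struct.unpack_from(">I", memory, addr)[0]


def read_le_u32(memory: bytes, addr: int) -> int:
    """Decode the same bytes as a little-endian (x86-style) load would."""
    return struct.unpack_from("<I", memory, addr)[0]


# Four bytes laid out as a big-endian program would store the value 1.
simulated_memory = bytes([0x00, 0x00, 0x00, 0x01])

print(hex(read_be_u32(simulated_memory, 0)))  # 0x1
print(hex(read_le_u32(simulated_memory, 0)))  # 0x1000000
```

The second call shows the value a naive native load on a little-endian host would produce for the same bytes.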

Evaluation

We have extensively tested our port to ensure that it produces exactly the same results as the original RSIM. The purpose of our evaluation is also to check whether using the ported version of RSIM is a cost-effective way to perform the large number of long-running simulations needed for our research.

Firstly, we compare the execution speed of RSIM running on several different architectures. Secondly, since the execution time of a single benchmark is not the most valuable metric for our purposes, we define a better indicator of the usefulness of each simulation platform and version of RSIM. We measure which version allows us to use our computing resources most efficiently in terms of hardware cost and execution time, using a metric based on the normalized number of simulations per hour per thousand euros.

We have measured the impact that the actual benchmark being simulated has on the speedup obtained by our port and have found that it is small, but not nonexistent. Hence, we have chosen a small set of representative benchmarks from the SPLASH suite to perform our experiments.

We have also measured the impact that varying the problem size of the simulated benchmarks has on the achieved speedup and have found that it is very small once a certain threshold is reached. Other simulator parameters, like the number of processors, have a very small influence too. Hence, we have chosen medium problem sizes, and we use two processors and default values for the rest of the parameters in our experiments.

We have evaluated the speed of running our port of RSIM on the following machines:

- A high-end Solaris/SPARC Sunblade-2000 system: SPARC-1.

- A low-end Solaris/SPARC Sunblade-100 system: SPARC-2.

- A high-end Linux/Athlon64 SMP system (running in legacy IA-32 mode): X86-64.

- A high-end Linux/Xeon SMP system: XEON.

- A low-end Linux/Pentium-IV system: P-IV.

The relevant characteristics and price of each machine are shown in the following table. The prices are necessarily approximate: they are what those systems would cost, in euros, as of January 2005 in Spain.

Table 1: Characteristics of evaluated configurations.

| | SPARC-1 | SPARC-2 | X86-64 | XEON | P-IV |
|---|---|---|---|---|---|
| Processor | UltraSPARC-III | UltraSPARC-III | AMD Opteron | Intel Xeon | Intel Pentium-IV |
| No. of processors | 1 | 1 | 2 | 2 | 1 |
| Frequency | 1015 MHz | 650 MHz | 1791 MHz | 2 GHz | 3 GHz |
| RAM | 2 GB | 256 MB | 1 GB | 1 GB | 1 GB |
| L2 cache | 8 MB | 512 KB | 1024 KB | 512 KB | 1024 KB |
| Price (euros) | 5000 | 1800 | 3000 | 2600 | 600 |

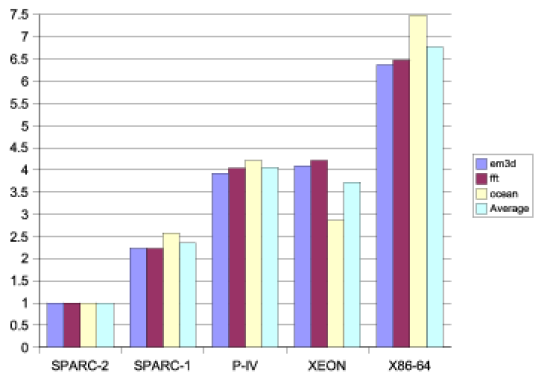

In figure 1 we show the normalized throughput of our set of benchmarks for each architecture. In other words, we show the throughput speedup of each machine compared with the slowest one (SPARC-2).

Figure 1: Normalized throughput per processor for each architecture.

Some of our machines are SMP systems with two processors. In those cases, we can run two instances of RSIM simultaneously, effectively doubling the throughput. Since the simulation work is CPU-bound, with very little I/O and modest memory requirements, there is no contention between the two independent processes.

In figure 2 we show the average number of simulations per hour per thousand euros achieved for each platform. When we account for the price of each machine and the number of processors, we see that the cheapest platform is the best option for making efficient use of any given budget. Also, the easy availability of this kind of machine makes it an even more attractive alternative to the expensive Solaris/SPARC machines used until now to run simulations based on RSIM.

Figure 2: Average number of simulations per hour per thousand euros.
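The cost-effectiveness metric can be sketched as follows. This is a minimal Python illustration that assumes one RSIM instance runs per processor, as described above. The processor counts and prices are taken from Table 1, but the simulation time is a placeholder, not a measured result, and the function name is hypothetical.

```python
def simulations_per_hour_per_keur(sim_seconds, processors, price_eur):
    """Simulations per hour per thousand euros, one instance per processor."""
    sims_per_hour = processors * 3600.0 / sim_seconds
    return sims_per_hour / (price_eur / 1000.0)


# (processors, price in euros) from Table 1.
machines = {
    "SPARC-1": (1, 5000),
    "SPARC-2": (1, 1800),
    "X86-64": (2, 3000),
    "XEON": (2, 2600),
    "P-IV": (1, 600),
}

# ASSUMPTION: a placeholder of one hour per simulation on every machine,
# so the differences printed below come only from price and processor count.
PLACEHOLDER_SIM_SECONDS = 3600.0

for name, (processors, price_eur) in machines.items():
    value = simulations_per_hour_per_keur(
        PLACEHOLDER_SIM_SECONDS, processors, price_eur
    )
    print(f"{name}: {value:.3f} simulations/hour/thousand euros")
```

Replacing the placeholder with the measured simulation time of each machine gives the kind of per-platform figure plotted in figure 2.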

Download

Licensing

This distribution includes the RSIM-x86 Simulator, RSIM Applications Library, Example Applications ported to RSIM, RSIM Utilities, and the RSIM Reference Manual.

The RSIM-x86 Simulator and RSIM Utilities are available under the University of Illinois/NCSA open source license agreement. Please, read the disclaimer included in the license.

The RSIM Applications Library is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License.

Current version

The current version of RSIM x86 is 2.2, released on 29 April 2005.

The following packages are available:

- Source tarball (includes precompiled binary for i386).

Documentation

The following documentation is available:

- Paper describing and evaluating RSIM-x86: Ricardo Fernandez and José M. García. RSIM x86: A cost-effective performance simulator. In 19th European Conference on Modelling and Simulation, pages 774-779, Riga, Latvia, June 2005. European Council for Modelling and Simulation. (BibTeX entry).

Dependencies

In order to build RSIM x86, the following software is needed:

- GCC version 3.3 or greater.

- GNU make.

- Libelf (packages elfutils-libelf and elfutils-libelf-devel in Fedora Core).

Benchmarks

We provide some benchmarks precompiled for RSIM. These benchmarks are provided as is.

Acknowledgements

This work has been supported by the Spanish Ministry of Ciencia y Tecnología and the European Union (Feder Funds) under grant TIC2003-08154-C06-03, and by fellowship 01090/FPI/04 from the Comunidad Autónoma de la Región de Murcia (Fundación Séneca, Agencia Regional de Ciencia y Tecnología).

Virtual GEMS

Virtual-GEMS is a simulation infrastructure that makes it easy to simulate a virtualized server. Virtual-GEMS is based on the Simics and GEMS simulators. It provides full-system virtualization, in which each virtual machine runs its own operating system. Virtual-GEMS simulates each virtual machine with a Simics process, and runs as many Simics processes as there are virtual machines in the simulated server. Each virtual machine executes its own operating system and workload, and these may be different for each VM. To obtain a single performance view of the whole server, a single GEMS instance simulates the timing of all virtual machines. To achieve that, Virtual-GEMS uses an interface that connects the Simics processes simulating the VMs with the one GEMS process simulating the timing of the whole system.

The main appeal of Virtual-GEMS is how easy it makes configuring simulations of virtualized systems. Virtual-GEMS uses ordinary Simics system checkpoints to create the virtual machines. This avoids the need to create complex checkpoints that include the hypervisor and the images of the virtual machines to simulate. Instead, a single Simics checkpoint can be used to simulate as many virtual machines as desired with that particular OS and workload. Different Simics checkpoints can be mixed in the same simulation to create virtualized systems with heterogeneous virtual machines.

Virtual-GEMS also enables the configuration of the mapping of the resources of the virtual machines to the resources in the physical machine. In particular, the processors and L2 cache banks of the system that are used by each virtual machine can be modified by setting a configuration parameter of Virtual-GEMS.

Virtual-GEMS does not simulate a software virtualization layer; rather, virtualization is managed by hardware.

Installation

Prerequisites

- We recommend the use of gcc 3.4.1

- We recommend the use of Simics 2.2.19

- We recommend the use of GEMS 2.1 (02/28/2008)

Files

Installation Steps

Patching GEMS

- Uncompress the GEMS tar

- Patch the GEMS directory just created (let's call it $GEMS) with the following commands:

    cd $GEMS
    patch -p1 < vg.diff

- Make these files executable:

    chmod u+x $GEMS/simics-scripts/vgems.sh
    chmod u+x $GEMS/simics-scripts/rrrun-workload

- Configure GEMS by following the steps indicated in this page: http://www.cs.wisc.edu/gems/doc/gems-wiki/moin.cgi/Setup

mi-device installation

- Once Simics 2.2.19 has been installed in a folder inside the GEMS folder, as shown in http://www.cs.wisc.edu/gems/doc/gems-wiki/moin.cgi/Setup , untar the file mi-device.tar.gz in $GEMS/simics:

    cd $GEMS/simics
    tar zxf mi-device.tar.gz

- Insert the following line in $GEMS/simics/config/modules.list-local:

    mi-device | API_2.0 | mygroup

- Compile mi-device with:

    cd $GEMS/simics/amd64-linux/lib
    gmake mi-device

Use of the scripts

One simulation involves the execution of one GEMS instance (remote_ruby.exec) and one Simics instance per virtual machine. The scripts that launch the simulation are located in the folder $GEMS/simics-scripts.

workloads.py

This file contains the workloads to use. The variable checkpoints_path holds the path to your checkpoints. In workload_templates you must insert the workloads you want to use. Each workload requires its name, the file name of its checkpoint, and the number of transactions.

If checkpoints_path is xxx and the checkpoint filename is yyy, then the checkpoint files for that workload must be placed in the folder xxx/yyy, and the full path to the checkpoint main file must be xxx/yyy/yyy.checkpoint.
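The path rule above can be expressed as a small Python helper. This is only a sketch of the naming convention: the function name `checkpoint_main_file` is hypothetical and is not part of workloads.py.

```python
import posixpath


def checkpoint_main_file(checkpoints_path, checkpoint_name):
    """Return the expected path of the main checkpoint file of a workload."""
    # The checkpoint lives in a folder named after it, and the main file
    # repeats that name with a ".checkpoint" suffix.
    return posixpath.join(
        checkpoints_path, checkpoint_name, checkpoint_name + ".checkpoint"
    )


print(checkpoint_main_file("xxx", "yyy"))  # xxx/yyy/yyy.checkpoint
```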

vgems.sh

BENCHMARKS: benchmarks to use and workloads to use in each benchmark. You can use the name of your benchmarks configured in the variable workload_templates in workloads.py. The benchmarks are separated by spaces, and the workloads in a particular benchmark are separated by commas. For example:

BENCHMARKS="apache4p,jbb4p"

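Each space-separated entry defines one simulation. The value above therefore defines a single simulation with one apache virtual machine and one jbb virtual machine; adding a second entry with an oltp workload and a zeus workload would define a second simulation with one oltp virtual machine and one zeus virtual machine.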

NVM: number of virtual machines in each simulation. The NVM value corresponding to the previous BENCHMARKS variable is: NVM=2.

NPROC_RUBY: number of processors in GEMS in each simulation. The NPROC_RUBY value corresponding to the previous BENCHMARKS variable, assuming that each VM has 4 processors, is: NPROC_RUBY=8

The number of processors in Ruby must be a power of two.
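The relationship between BENCHMARKS, NVM and NPROC_RUBY can be checked with a short Python sketch. It is not part of vgems.sh. It assumes that every simulation uses the same number of VMs and that every VM has the same number of processors. The function names, and the workload names oltp4p and zeus4p in the second call, are hypothetical.

```python
def parse_benchmarks(benchmarks):
    """Split BENCHMARKS into simulations (by spaces) and workloads (by commas)."""
    return [entry.split(",") for entry in benchmarks.split()]


def is_power_of_two(n):
    return n > 0 and (n & (n - 1)) == 0


def derive_settings(benchmarks, procs_per_vm):
    """Derive NVM and NPROC_RUBY for a BENCHMARKS value."""
    simulations = parse_benchmarks(benchmarks)
    vm_counts = {len(simulation) for simulation in simulations}
    if len(vm_counts) != 1:
        raise ValueError("expected the same number of VMs in every simulation")
    nvm = vm_counts.pop()
    nproc_ruby = nvm * procs_per_vm
    if not is_power_of_two(nproc_ruby):
        raise ValueError("NPROC_RUBY must be a power of two")
    return {"simulations": simulations, "NVM": nvm, "NPROC_RUBY": nproc_ruby}


# The example from the text: two VMs with four processors each.
print(derive_settings("apache4p,jbb4p", 4))

# Two simulations; "oltp4p" and "zeus4p" are hypothetical workload names.
print(derive_settings("apache4p,jbb4p oltp4p,zeus4p", 4))
```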

Finally, we have to configure the architecture parameters in the RUBY_CONFIG variable. This variable is a string with Ruby parameters. The default options are specified in $GEMS/ruby/config/rubyconfig.defaults; any of them can be overridden. Virtual-GEMS adds the following parameters:

- STATIC_CACHE_BANK_DIRECTORY_MAPPING: Use VMCBM mapping (see the Virtual-GEMS paper).

- PER_PROC_STATIC_CACHE_BANK_DIRECTORY_MAPPING: Use TCBM mapping. Simple mapping is used by default.

- CUSTOM_VM_PROCESSOR_MAPPING: A string with the mapping of physical processors to virtual machines. For example, "0-0-1-1" gives the first two processors in the system to VM 0 and the last two processors to VM 1.

- CUSTOM_VM_L2BANK_MAPPING: The same as CUSTOM_VM_PROCESSOR_MAPPING, but for L2 slices.
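To make the mapping string format concrete, the following Python sketch decodes a value such as "0-0-1-1". It assumes, based on the example above, that position i in the string refers to physical processor (or L2 slice) i and that the value at that position is the VM that owns it. The function name is hypothetical and is not part of Virtual-GEMS.

```python
def parse_vm_mapping(mapping):
    """Group physical resource indices by the VM they are assigned to."""
    vm_to_resources = {}
    for resource_index, vm_id in enumerate(mapping.split("-")):
        vm_to_resources.setdefault(int(vm_id), []).append(resource_index)
    return vm_to_resources


print(parse_vm_mapping("0-0-1-1"))  # {0: [0, 1], 1: [2, 3]}
```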

Execution

- To launch simulations, set the environment variable SIMICS_EXTRA_LIB to ./modules

- Add the following lines to ~/.bash_profile:

    SIMICS_EXTRA_LIB=./modules
    export SIMICS_EXTRA_LIB

- Compile the protocol to use:

    cd $GEMS/ruby
    make PROTOCOL=MOESI_CMP_directory DESTINATION=MOESI_CMP_directory

- Now you can execute the script from the home directory to run a simulation with Virtual-GEMS:

    cd $GEMS/simics/home/MOESI_CMP_directory
    mkdir results
    ../../../simics-scripts/vgems.sh results

A new subfolder for the executed simulation will be created in the output folder. Inside that subfolder, GEMS and each virtual machine will have their own output files. The stats of the simulation are printed each time a virtual machine ends its execution. Now you can use vgems.sh to launch the simulations.

MURCIA

Introduction

Bioinformatics methods can considerably aid clinical research, providing very useful insights and predictions working at the molecular level in fields like Virtual Screening, Molecular Dynamics and Protein Folding. However, the successful application of such methods is drastically limited by the computational resources required, particularly, whenever they deal with accurate biophysical models. A leading example of this computational issue is the calculation of the solvent accessible surface area (SASA).

We present a novel method called MURCIA (Molecular Unburied Rapid Calculation of Individual Areas) that takes advantage of the latest generation of massively parallel Graphics Processing Units (GPUs) to considerably speed up SASA calculations. To date, MURCIA is one of the fastest methods available across a wide range of molecular sizes (tested with up to millions of atoms), and it also provides a good framework for accelerating the visualization of molecular surfaces in standard molecular graphics programs.
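For readers unfamiliar with SASA, the following Python sketch shows what is being computed. It uses the classic Shrake-Rupley point-sampling scheme on the CPU, chosen here only for illustration; it is not MURCIA's GPU algorithm. The probe radius of 1.4 Å, the number of sample points and the function names are assumptions.

```python
import math


def sphere_points(n):
    """Quasi-uniform points on the unit sphere (golden-spiral lattice)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n
        ring = math.sqrt(1.0 - z * z)
        theta = golden_angle * i
        points.append((ring * math.cos(theta), ring * math.sin(theta), z))
    return points


def sasa_per_atom(atoms, probe=1.4, n_points=200):
    """Per-atom accessible areas for atoms given as (x, y, z, radius)."""
    unit = sphere_points(n_points)
    areas = []
    for i, (xi, yi, zi, ri) in enumerate(atoms):
        expanded = ri + probe  # radius of the probe-inflated sphere
        exposed = 0
        for ux, uy, uz in unit:
            px = xi + expanded * ux
            py = yi + expanded * uy
            pz = zi + expanded * uz
            # A sample point is buried if it falls inside the inflated
            # sphere of any other atom.
            buried = False
            for j, (xj, yj, zj, rj) in enumerate(atoms):
                if j == i:
                    continue
                limit = rj + probe
                dist2 = (px - xj) ** 2 + (py - yj) ** 2 + (pz - zj) ** 2
                if dist2 < limit * limit:
                    buried = True
                    break
            if not buried:
                exposed += 1
        areas.append(4.0 * math.pi * expanded * expanded * exposed / n_points)
    return areas


# One isolated atom versus two overlapping atoms (coordinates in angstroms).
print(sasa_per_atom([(0.0, 0.0, 0.0, 1.7)]))
print(sasa_per_atom([(0.0, 0.0, 0.0, 1.7), (1.5, 0.0, 0.0, 1.7)]))
```

The triple loop makes the cost grow quickly with the number of atoms, which is why accurate SASA calculation on large molecules is a natural target for massively parallel hardware.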

Installation

Prerequisites

- You need an NVIDIA CUDA-capable device in order to execute MURCIA.

- You must have the NVIDIA driver for your graphics card installed.

- You must have the NVIDIA CUDA Toolkit installed.

- You must have the NVIDIA CUDA SDK installed under your home folder: $(HOME)/NVIDIA_GPU_Computing_SDK.

- You can download the packages you need from http://developer.nvidia.com/cuda-downloads.

- Please see the CUDA Getting Started Guide (Windows/Linux/Mac OS X) in the NVIDIA CUDA GPU Computing documentation and follow its installation instructions.

Once you have CUDA installed, just go to the main MURCIA folder and type “make”.

The MURCIA executable will be placed in .bin/murcia.

Download files

The files will soon be available for download (22/11/2011).

Acknowledgements

This research was supported by the Fundación Séneca (Agencia Regional de Ciencia y Tecnología, Región de Murcia) under grants 00001/CS/2007 and 15290/PI/2010, by the Spanish MEC and European Commission FEDER under grants CSD2006-00046 and TIN2009-14475-C04, and by a postdoctoral contract from the University of Murcia (30th December 2010 resolution).